What is Deepchecks?

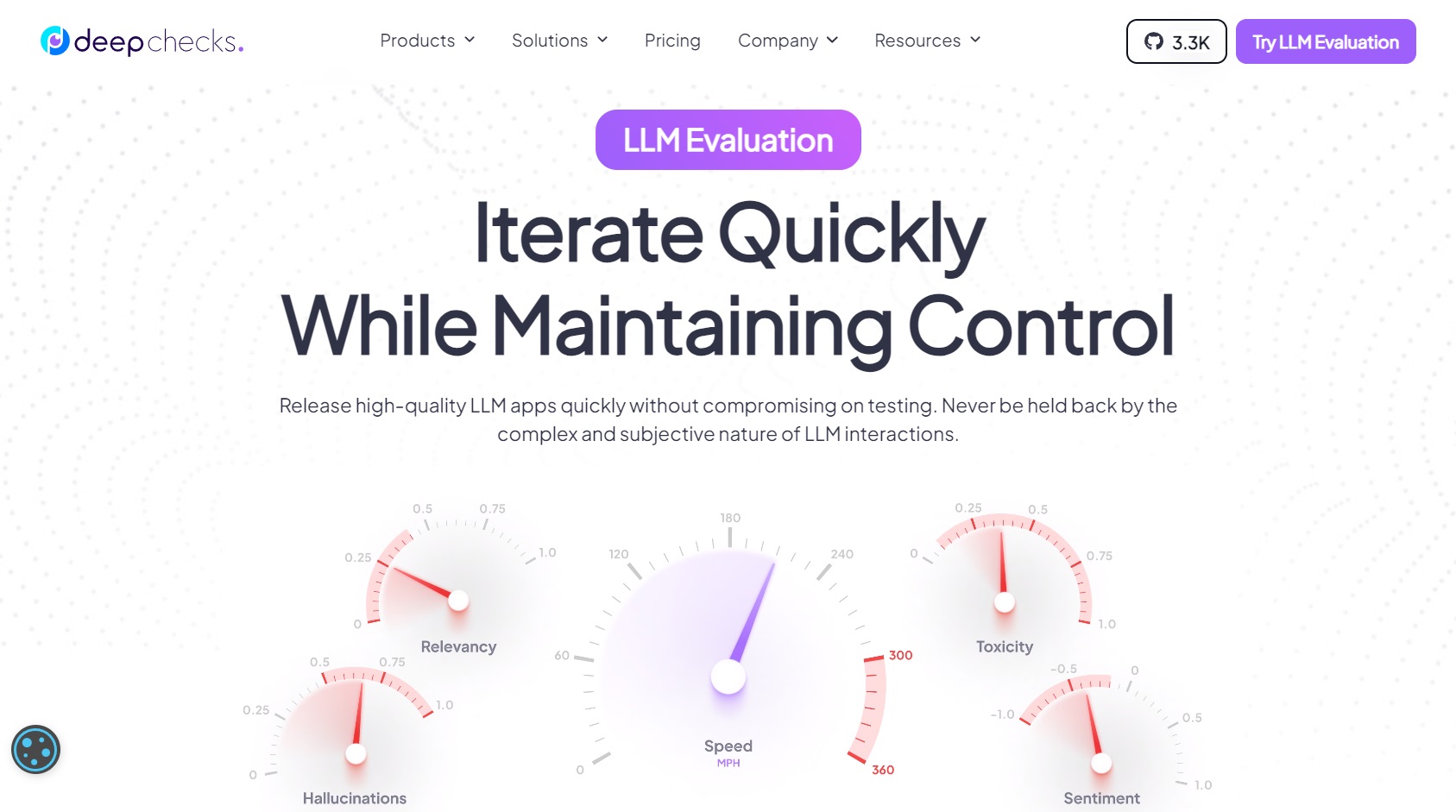

Deepchecks is a solution for evaluating LLM-based apps, whose interactions are complex and often subjective to judge. It lets users iterate quickly while keeping control over the evaluation process, which is otherwise usually done by hand by subject matter experts.

The platform focuses on evaluating the quality and compliance of LLM apps: detecting and mitigating issues such as hallucinations, incorrect answers, bias, deviations from policy, and harmful content, both before and after the app goes live.

A key concept in Deepchecks is the “Golden Set”, a curated set of test samples that is essential for testing LLM apps. Annotating such a set manually is time-consuming, at 2-5 minutes per sample, which makes it impractical to repeat for every experiment or version candidate.
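To see why per-sample annotation time adds up, here is a rough back-of-the-envelope calculation. Only the 2-5 minutes per sample comes from the text; the golden set size (300) and the number of version candidates (10) are hypothetical values chosen for illustration.

```python
# Rough cost of manually re-annotating a golden set for every version candidate.
# The 2-5 minute range is from the text; the sizes below are hypothetical.

def annotation_hours(num_samples: int, num_versions: int,
                     minutes_per_sample: float) -> float:
    """Total expert hours to annotate `num_samples` once per version."""
    return num_samples * num_versions * minutes_per_sample / 60.0

GOLDEN_SET_SIZE = 300     # hypothetical number of samples in the golden set
VERSION_CANDIDATES = 10   # hypothetical number of versions to compare

low = annotation_hours(GOLDEN_SET_SIZE, VERSION_CANDIDATES, 2)
high = annotation_hours(GOLDEN_SET_SIZE, VERSION_CANDIDATES, 5)
print(f"{low:.0f}-{high:.0f} expert hours")  # prints "100-250 expert hours"
```

Even at these modest, assumed sizes, fully manual annotation costs weeks of expert time per comparison cycle.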

Deepchecks automates this evaluation by producing estimated annotations, which users can override when necessary. It is an open-core product whose open-source part, a widely tested ML testing package, validates machine learning models and data with minimal effort in both the research and production phases. It also includes ML monitoring capabilities for continuously validating models and data once they are deployed.
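The "estimated annotation with human override" workflow can be sketched as a simple data structure. This is a minimal, hypothetical illustration of the pattern, not the Deepchecks API: the `Sample` class, its fields, and the `"good"`/`"bad"` labels are assumptions, and the estimates are hard-coded where a real system would produce them with an automated evaluator.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Sample:
    """Hypothetical record: one evaluated interaction in a golden set."""
    sample_id: str
    estimated: str                  # automatic annotation, e.g. "good" / "bad"
    override: Optional[str] = None  # set by a reviewer who disagrees

    @property
    def final(self) -> str:
        # A human override, when present, always wins over the estimate.
        return self.override if self.override is not None else self.estimated

def summarize(samples: list) -> dict:
    """Share of samples finally labeled "good" and how many were overridden."""
    if not samples:
        raise ValueError("no samples to summarize")
    good = sum(s.final == "good" for s in samples)
    overridden = sum(s.override is not None for s in samples)
    return {"good_rate": good / len(samples), "overridden": overridden}

samples = [
    Sample("q1", estimated="good"),
    Sample("q2", estimated="bad"),
    Sample("q3", estimated="good", override="bad"),  # reviewer disagrees
    Sample("q4", estimated="bad", override="good"),  # reviewer disagrees
]
print(summarize(samples))  # prints {'good_rate': 0.5, 'overridden': 2}
```

The design keeps the estimate and the override side by side rather than overwriting one with the other, so reviewers only touch the samples they disagree with, and the number of overrides doubles as a rough signal of how far the automatic estimates can be trusted.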